Projects Update 1 (Mar 2023)

This month, we have two weeks of projects to share with you. This week, we focus on the Unreal film projects we found. The breadth of work folks are creating with this toolset is astounding – all these films highlight a range of talent, development of workflows and the accessibility of the tools being used. The films also demonstrate what great creative storytelling talent there is among the indie creator communities across the world. Exciting times!

NOPE by Red Render

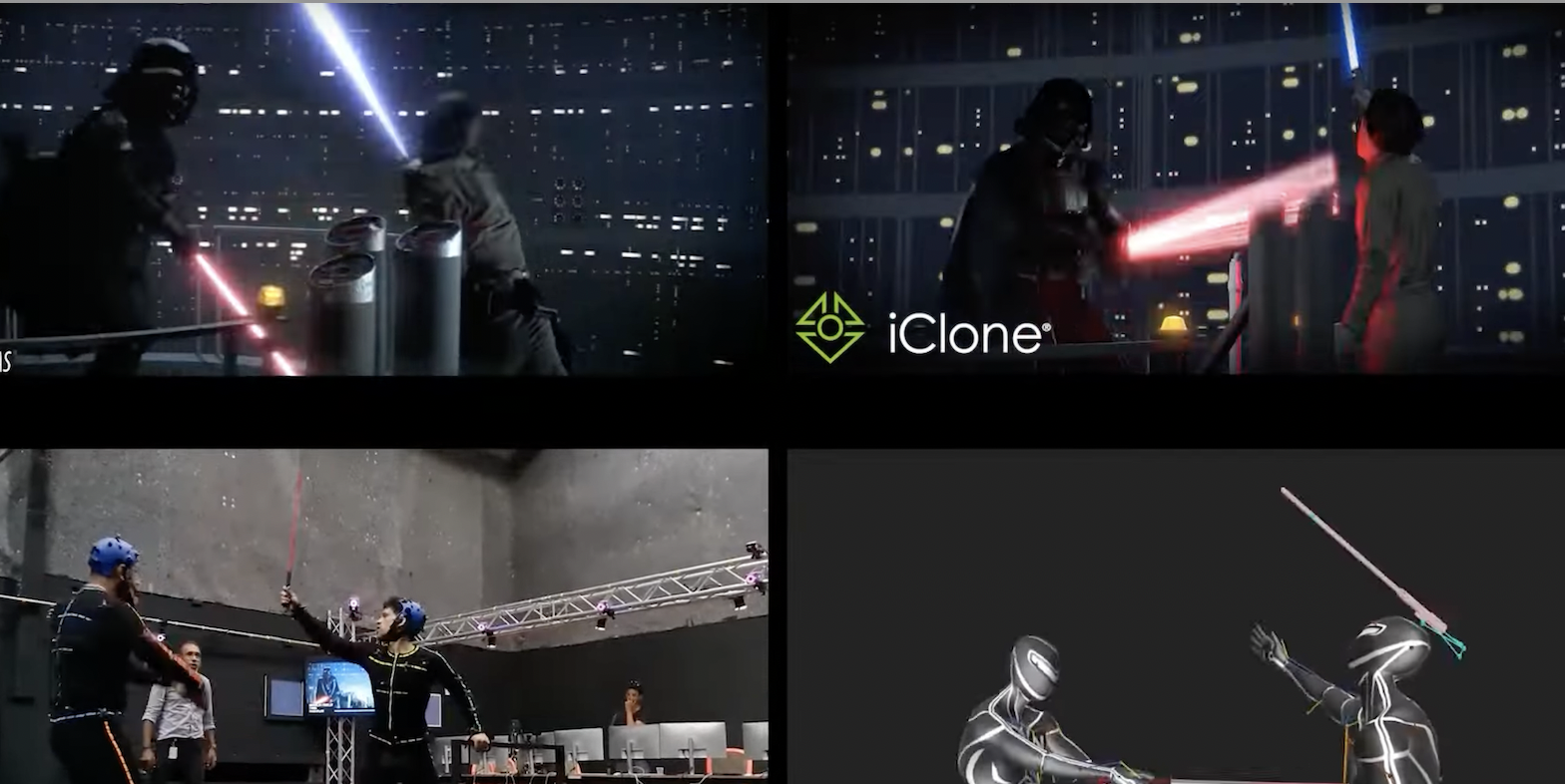

Alessio Marciello’s (aka Red Render) NOPE, released on 11 December 2022, is a Jordan Peele-inspired film made with UE5, Blender and iClone 8. The pace and soundscape are impressive, the lucid dream of a bored schoolboy is an interesting creative choice, and we love the hint of Enterprise at the end! Check it out here –

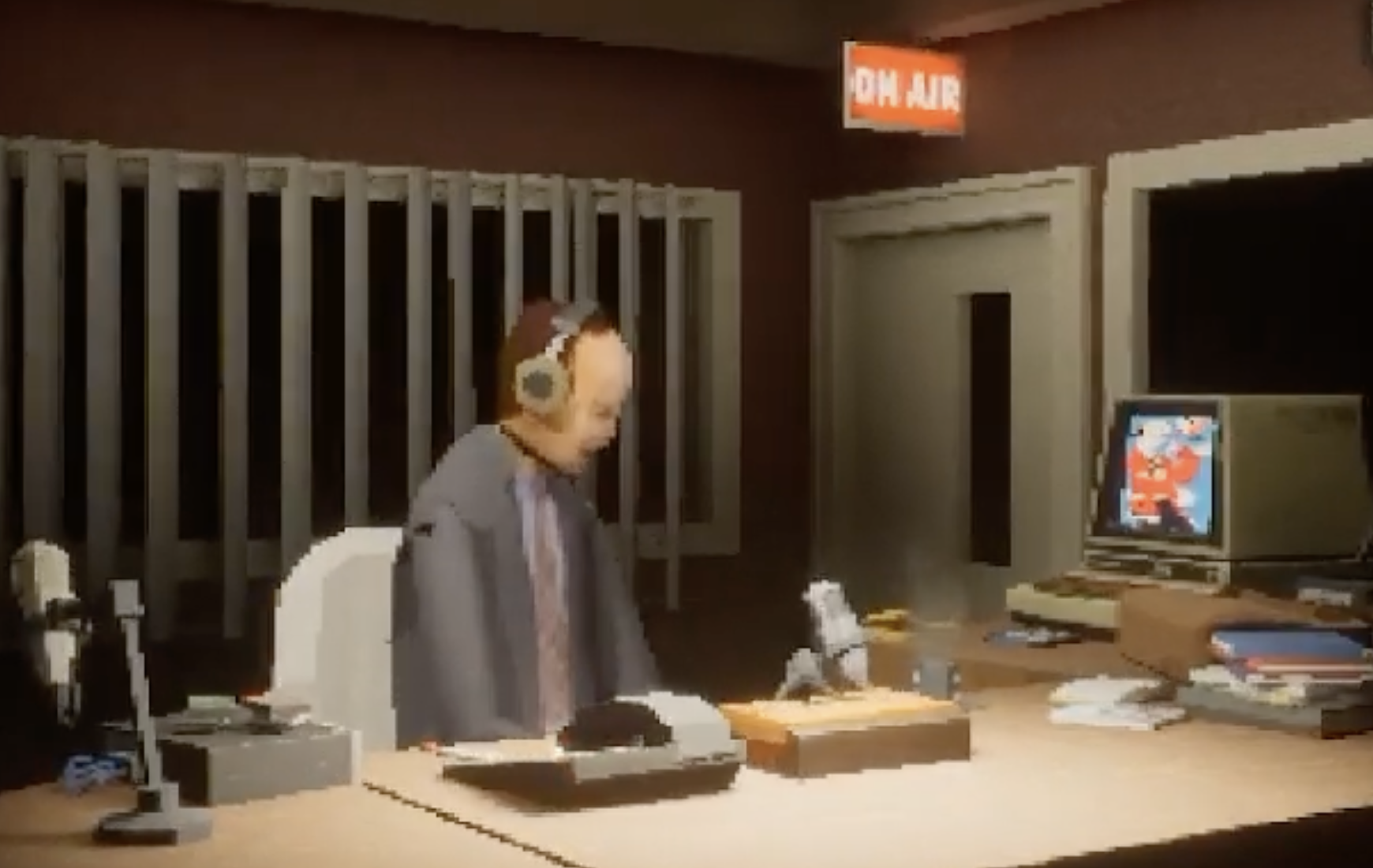

The Perilous Wager by Ethan Nester

Ethan Nester’s The Perilous Wager, released 28 November 2022, uses UE’s MetaHumans and is our next short project pick. It is reminiscent of film noir and crime drama, mixed with a twist of lime. It’s a well-managed story with some hidden depths, evidenced only in the buzzing of flies. It ends a little abruptly but, as its creator says, it’s about ideas for larger projects. The voice acting is great, and we also love that Ethan voiced all the characters himself, using Altered.AI, he says, to create vocal deepfakes. He highlights how going through the voice acting process helped him improve his acting skills too – impressive work! We look forward to seeing how these ideas develop in due course. Here’s the link –

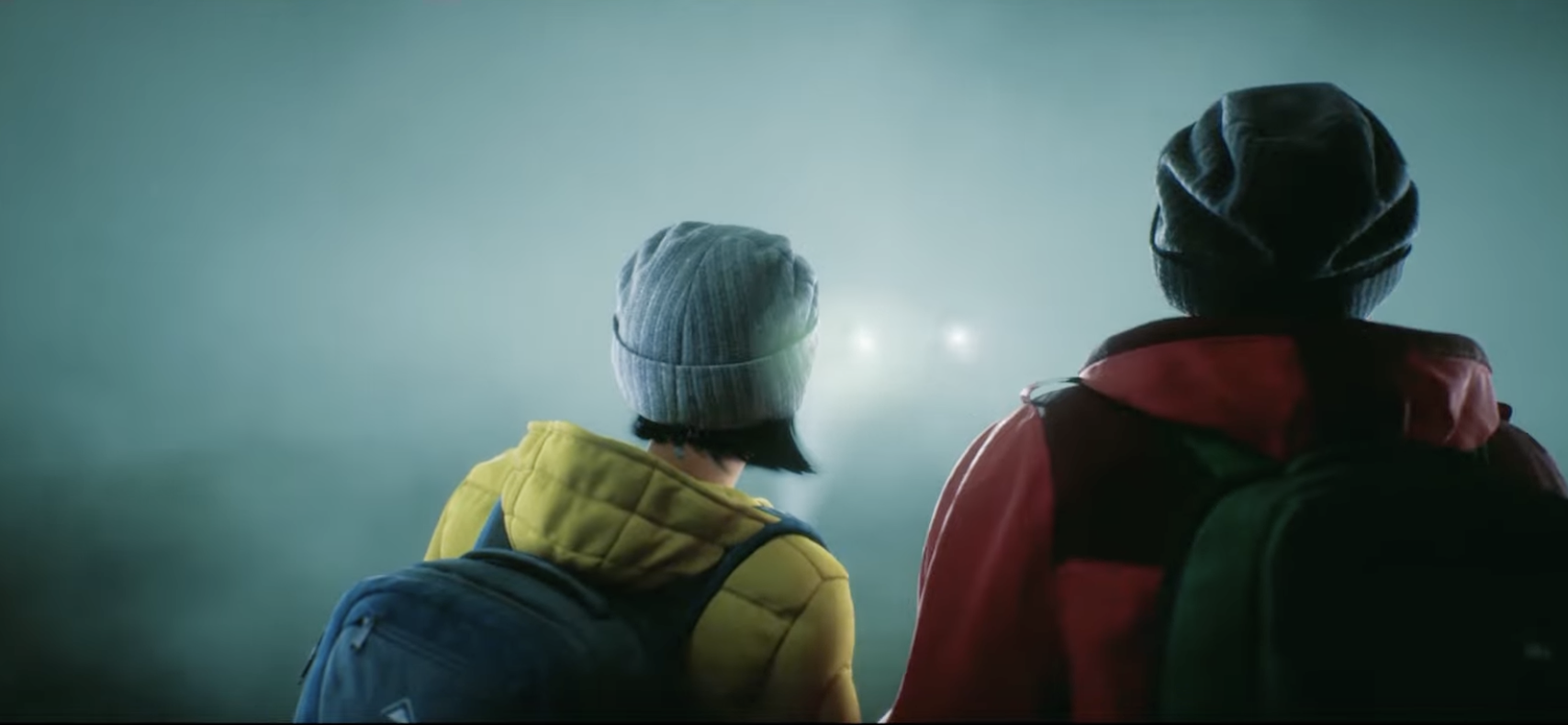

Gloom by Bloom

Another dark and moody project (it’s also our feature image for this post), Gloom was created for the Australia and New Zealand short film challenge 2022, supported by Screen NSW and Epic. The film is by Bloom, released 17 December 2022, and was created in eight weeks. The sci-fi concept is great, the voice acting is impressive and the story is well told, with some fab jumpscares in it too. The sound design is worth noting, and we recommend wearing a headset to get the full sense of the expansive soundscape the team have devised. Overall, a great project and we look forward to seeing more work from Bloom too –

Adarnia by Adarnia Studio

Our next project turns UE characters into ancient ones. A slightly longer format project, it has elements of Star Wars, Blade Runner and just a touch of Jason and the Argonauts, mixed together with an expansive cityscape to boot. Adarnia is a sci-fi fantasy created by Clemhyn Escosora and released 19 March 2021. There’s an impressive vehicle chase which perhaps goes on just a little too long, but there’s also an interesting use of assets, replicated in different ways across the various scenes and brought together nicely towards the end of the film. The birdsong is a little distracting in places, one of those ‘nuisance scores’ we highlighted in last week’s blog post (Tech Update 2). A lot of work has clearly gone into this, and perhaps the expansive world it shows has scope for a game, but the film’s story needs to be just a little tighter. We guess the creators agree, because their YouTube channel is full of excerpts focusing on key components of this work. Check out the film here –

Superman Awakens by Antonis Fylladitis

Our final project for this week is a Superman tale, created by the VFX company Floating House. The film, released on 13 February 2023, is inspired by Kingdom Come Superman and Alex Ross and is a very interesting mix of comic styling and narrative film, with great voice acting from Daniel Zbel. It’s another great illustration of the quality of the UE assets available to talented storytellers –

Next week, we take a look at films made with other engines.
