Fests & Contests Update (Nov 2022)

There is a growing number of ‘challenges’ that we’ve been finding over the last few months. Many are opportunities to learn new tools or use assets created by studios such as MacInnes Studios. They are also incentivised with some great prizes, generally involving something offered by the contest organizer, as with the Kitbash3D challenge we link to in this post. This week we were light on actual live contests to call out, but we have found someone who is always in the know: Winbush!

Mission to Minerva (deadline 2 Dec 2022)

Kitbash3D’s challenge is for you to contribute to the development of a new galaxy! On their website, they state: ‘Your mission, should you choose to accept, is to build a settlement on a planet within the galaxy. What will yours look like?’ Their ultimate aim is to outsource all the creative work to their community, combining the artworks that contest participants submit. There are online tutorials to assist, where they show you how to use Kitbash3D in Blender and Unreal Engine 5, and your work can be either concept art or animation. Entry into the contest couldn’t be simpler: you just need to share on social media (Twitter, FB, IG, ArtStation) and use the hashtag #KB3Dchallenge. Winners will be announced on 20 December and there are some great prizes, sponsored by the likes of Unreal, Nvidia, CG Spectrum, Wacom, The Gnomon Workshop, The Rookies and ArtStation (platforms). Entry details and more info here.

Pug Forest Challenge

This contest has already wrapped, but a few challenges of this type are now emerging: they give you an asset to play with for a period of time, a submission guideline process, and some fabulous prizes, all geared towards incentivising you to learn a new toolset, this one being UE5! So if you need the incentive to motivate you, it’s definitely worth looking out for these. Jonathan Winbush is also one of those folks whose tutorials are legendary in the UE5 community, so even if you don’t want to enter, this is someone to follow.

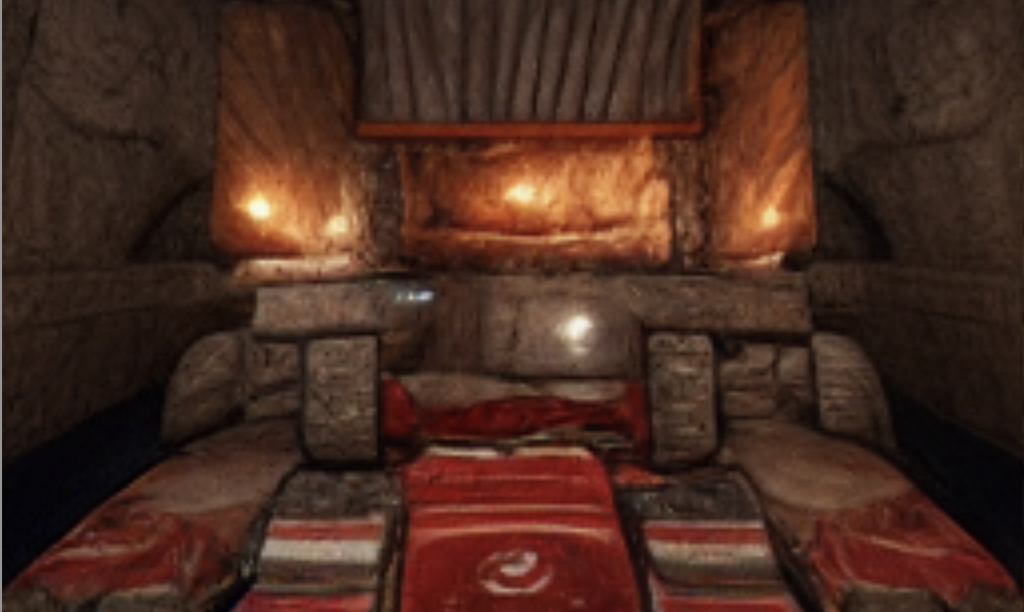

MacInnes Studios’ Mood Scene Challenge

John MacInnes recently announced the winners of his Mood Scene challenge, which we reported on back in August. We must say, the winners have certainly delivered some amazing moods. Check out the showreel here.
