Tech Update 1: AI Generators (Jan 2023)

A month is apparently a VERY long time in the world of artificial intelligence… since our last post on this topic, released at the beginning of December, we’ve seen even more amazing tools launch.

ChatGPT

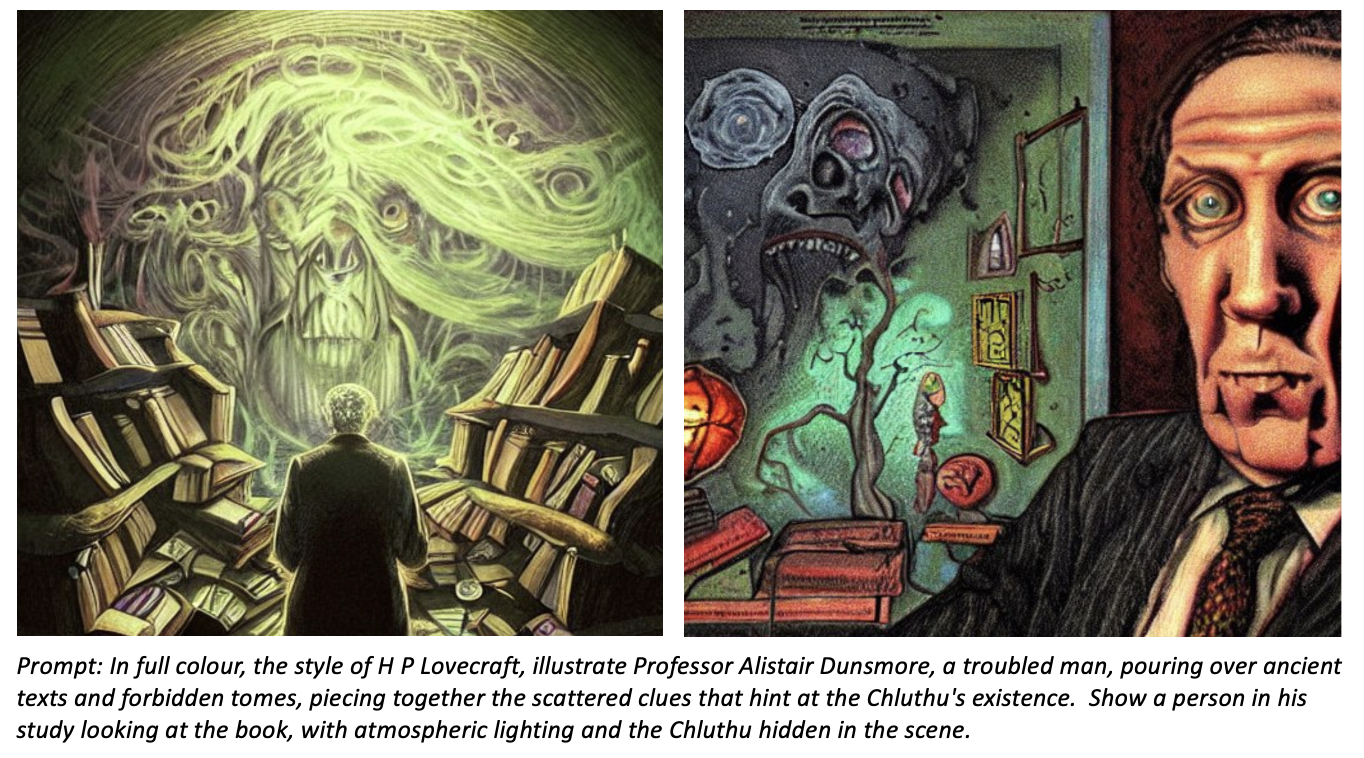

This is another creative AI from the OpenAI stable (also creator of DALL-E 2) that produces text in a conversational way. ChatGPT is capable of answering pretty much any question or request you care to throw at it, and the answers are actually quite robust if a little fuzzy around the edges at times (don’t rely on it for anything critical, in the words of Sam Altman, OpenAI CEO). Here’s an example I asked it for this post, using the prompt: ‘In the style of H P Lovecraft, write a description of a character for someone who hunts the Chluthu’

Response:

Professor Alistair Dunsmore was a man obsessed. For years, he had devoted his life to studying the mysteries of the cosmos, and in particular, the myth of the Chluthu.

A brilliant but troubled man, Dunsmore was driven by a need to uncover the truth about the terrifying creature that lurked beyond the veil of our known universe. He had spent years pouring over ancient texts and forbidden tomes, piecing together the scattered clues that hinted at the Chluthu’s existence.

And then, one fateful day, he discovered something that would change his life forever. A fragment of an ancient artifact, imbued with otherworldly powers, that seemed to hold the key to finding the Chluthu.

Dunsmore knew that he had to have it, no matter the cost. And so he set out on a quest to track down the artifact and unlock its secrets. He traveled to the far corners of the earth, braving dangers and braving the unknown, all in the pursuit of his ultimate goal.

Some say that Dunsmore was mad, driven to the brink by his obsession. But to those who knew him, he was a hero, a brave and brilliant man who dared to stare into the darkness and face the horrors that lay within.

That’s impressive – and it took just seconds to generate. It has great potential as a tool for scriptwriting and character development in machinima and virtual productions, and for producing the marketing assets you might use to promote your creative works too!

And as if that isn’t useful enough, some bright folks have already used it to write a game and even create a virtual world. Note the detail in the prompts being used – this one from Jon Radoff’s article (4 Dec 2022) for an adventure game concept: ‘I want you to act as if you are a classic text adventure game and we are playing. I don’t want you to ever break out of your character, and you must not refer to yourself in any way. If I want to give you instructions outside the context of the game, I will use curly brackets {like this} but otherwise you are to stick to being the text adventure program. In this game, the setting is a fantasy adventure world. Each room should have at least 3 sentence descriptions. Start by displaying the first room at the beginning of the game, and wait for my to give you my first command’.
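For those who like to tinker, the structure of that prompt can be captured in a few lines of Python. This is only a rough sketch: the function names and parameters are made up for illustration, the wording is adapted from the prompt quoted above, and it simply builds the text you would paste into ChatGPT rather than talking to any API.

```python
def build_adventure_prompt(setting: str, min_sentences: int = 3) -> str:
    """Assemble a role-play prompt adapted from the text adventure example.

    Both parameters are hypothetical knobs added for illustration.
    """
    if min_sentences < 1:
        raise ValueError("min_sentences must be at least 1")
    parts = [
        # Role: say what the model should be, and that it must stay in character.
        "I want you to act as if you are a classic text adventure game and we are playing.",
        "I don't want you to ever break out of your character, "
        "and you must not refer to yourself in any way.",
        # Escape hatch: a marker for instructions that sit outside the game.
        "If I want to give you instructions outside the context of the game, "
        "I will use curly brackets {like this} but otherwise you are to stick "
        "to being the text adventure program.",
        # Detail: the setting and how much description each room should get.
        f"In this game, the setting is {setting}.",
        f"Each room should have at least {min_sentences} sentence descriptions.",
        # Kick-off: what to show first, then hand control back to the player.
        "Start by displaying the first room at the beginning of the game, "
        "and wait for me to give you my first command.",
    ]
    return " ".join(parts)


def out_of_game(instruction: str) -> str:
    """Wrap an instruction in curly brackets so it reads as outside the game."""
    return "{" + instruction + "}"


if __name__ == "__main__":
    print(build_adventure_prompt("a fantasy adventure world"))
    print(out_of_game("make the rooms darker and more Lovecraftian"))
```

Swapping the setting or the sentence count is then a one-word change, which makes it easy to compare how the tool responds to different levels of detail.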

The detail is obviously the key and no doubt we’ll all get better at writing prompts as we learn how the tools respond to our requests. It is interesting that some are also suggesting there may be a new role on the horizon… a ‘prompt engineer’ (check out this article in the UK’s Financial Times). Yup, that and a ‘script prompter’, or any other possible prompter-writer role you can think of… but can it tell jokes too?

Give it a go – we’d love to hear your thoughts on the ideas it generates. Of course, those of you with even more flAIre can then use the scripts to generate images, characters, videos, music and soundscapes. There’s no excuse for not giving these new tools for producing machine cinema a go, surely.

The link requires registration (it is currently free). Note that the tool now also keeps all of your previous chats, which enables you to build on themes as you go: ChatGPT

Image Generators

Building on ChatGPT, D-ID enables you to create photorealistic speaking avatars from text. You can even upload your own image to create a speaking avatar, which of course raises a few IP issues, as we’ve just seen from the LENSA debacle (see this article on FastCompany’s website), but JSFILMZ has highlighted some of the potential of the tech for machinima and virtual production creators here –

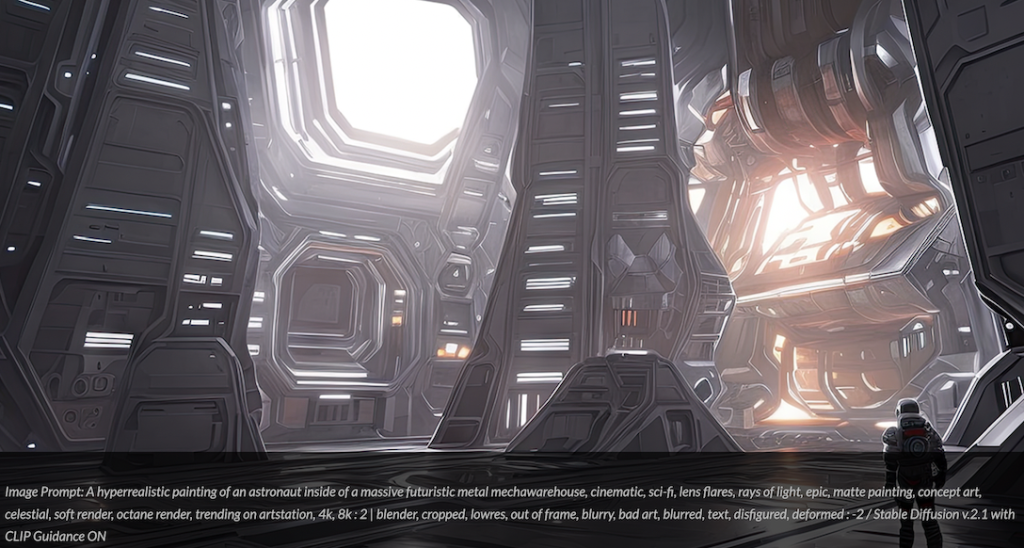

An AI we’ve mentioned previously, Stable Diffusion, released version 2.1 on 7 December 2022. This is an image-generating AI, and its creative tool is called DreamStudio (the Pro version will create video). In this latest version of the algorithm, developers have improved the filter which removes adult content, yet it still enables beautiful and realistic-looking images of characters to be created (now with better defined anatomy and hands), as well as stunning architectural concepts, natural scenery, etc. in a wider range of aesthetic styles than previous versions. It also enables you to produce images with non-standard aspect ratios such as panoramas. As with ChatGPT, the quality of the image depends a lot on the prompt you write. This image and prompt example is taken from the Stability.ai website –

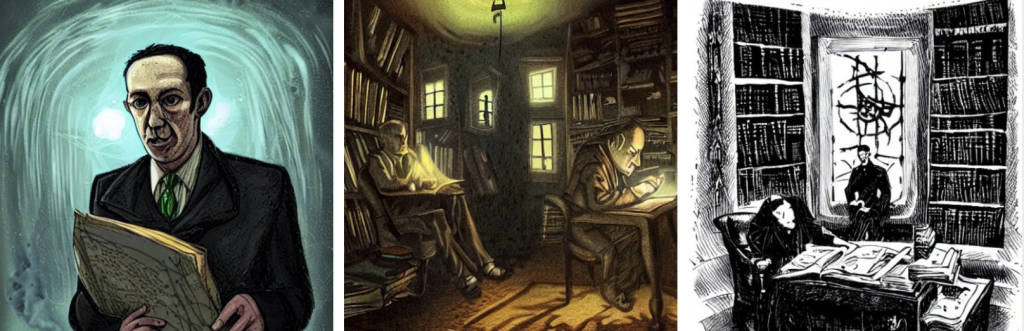

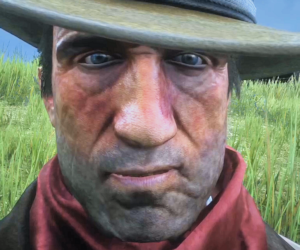

So, just to show you how useful this can be, I took some text from the ChatGPT narrative for our imaginary character, Professor Alistair Dunsmore, and used a prompt to generate images of what he might look like and where he might be doing his research. The feature images for this post are some of the images it generated – and I guess I shouldn’t have been so surprised that the character looks vaguely reminiscent of Lovecraft himself. The prompt also produced some other images (below) and all you need to do is select the image you like best. Again, these are impressive outputs from a couple of minutes of playing around with the prompt.

For next month, we might even see if we can create a video for you, but in the meantime, here’s an explainer of a similar approach that Martin Nebelong has taken, using MidJourney instead to retell some classic stories –

Supporting the great potential for creative endeavour, ArtStation has taken a stance in favour of the use of AI in generating images on its portfolio website (which btw was bought by Epic Games in 2021). This is in spite of thousands of its users demanding that it remove AI generated work and prevent content being scraped. Their demand is based on the lack of transparency from AI developers about how they build and use their training datasets. Instead, ArtStation has removed from its homepage those portfolios using the Ghostbusters-like logo (‘no to AI generated images’) and issued a statement about how creatives using the platform can protect their work. The text of an email received on 16 December 2022 stated:

‘Our goal at ArtStation is to empower artists with tools to showcase their work. We have updated our Terms of Service to reflect new features added to ArtStation as it relates to the use of AI software in the creation of artwork posted on the platform.

First, we have introduced a “NoAI” tag. When you tag your projects using the “NoAI” tag, the project will automatically be assigned an HTML “NoAI” meta tag. This will mark the project so that AI systems know you explicitly disallow the use of the project and its contained content by AI systems.

We have also updated the Terms of Service to reflect that it is prohibited to collect, aggregate, mine, scrape, or otherwise use any content uploaded to ArtStation for the purposes of testing, inputting, or integrating such content with AI or other algorithmic methods where any content has been tagged, labeled, or otherwise marked “NoAI”.

For more information, visit our Help Center FAQ and check out the updated Terms of Service.’
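To give a feel for how a well-behaved scraper might honour that tag, here is a minimal Python sketch using only the standard library. The email does not spell out the exact markup, so the form checked here (a `robots` meta tag whose `content` includes `noai`) is an assumption, and the class and function names are invented for illustration.

```python
from html.parser import HTMLParser


class NoAIMetaParser(HTMLParser):
    """Look for a meta tag that opts a page out of AI use.

    Assumption: the opt-out looks like <meta name="robots" content="noai">.
    The source only says "an HTML NoAI meta tag".
    """

    def __init__(self) -> None:
        super().__init__()
        self.no_ai = False

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # Attribute values can be None (e.g. <meta name>), so default to "".
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() != "robots":
            return
        # The content attribute may hold several comma-separated directives.
        directives = [d.strip().lower() for d in attr_map.get("content", "").split(",")]
        if "noai" in directives:
            self.no_ai = True


def page_disallows_ai(html: str) -> bool:
    """Return True if the page carries the assumed NoAI directive."""
    parser = NoAIMetaParser()
    parser.feed(html)
    return parser.no_ai


if __name__ == "__main__":
    sample = '<html><head><meta name="robots" content="noai"></head></html>'
    print(page_disallows_ai(sample))
```

A tag like this only works if the people building datasets choose to check for it, which is why the updated Terms of Service matter as much as the markup.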

You can also read an interesting article following the debate on The Verge’s website here, published 23 December 2022.

We’ve said it before, but AI is one of the tools that the digital arts community has commented on FOR YEARS. Its best use is as a means to support creatives to develop new pathways in their work. It does cut corners but it pushes people to think differently. I direct the UK’s Art AI Festival and the festival YouTube channel contains a number of videos of live streamed discussions we’ve had with international artists, such as Ernest Edmonds, a founder of the digital arts movement in the 1960s; Victoria and Albert Museum (London) digital arts curator Melanie Lenz; the first creative AI Lumen Prize winner, Cecilie Waagner Falkenstrom; and Eva Jäger, artist, researcher and assistant curator at Serpentine Galleries (London), among others. All discuss the role of AI in the development of their creative and curatorial practice, and AI is often described as a contemporary form of a paintbrush and canvas.

As I’ve illustrated above with the H P Lovecraft character development process, it’s a means to generate ideas from which you can select and explore new directions, a process that might otherwise take weeks. It is unfortunate that some have narrowed their view of its use rather than engaging more actively in discussion on how it might add to the creative processes employed by artists, but we also understand the concerns some have about the blatant exploitation of copyrighted material used without any real form of attribution. Surely AI can be part of the solution for that problem too, although I have to admit that so far I’ve seen very little effort being put into this part of the challenge – maybe you have?

In other developments, a new ‘globe’ plug-in for Unreal Engine has been developed by Blackshark. This is a fascinating world view, giving users access to synthetic 3D (#SYNTH3D) terrain data, including ground textures, buildings, infrastructure and vegetation of the entire Earth, based on satellite data. It contains some stunning sample sets and, according to Blackshark’s CEO Michael Putz, is the beginning of a new era of visualizing large scale models combined with georeferenced data. I’m sure we can all think of a few good stories that this one will be useful for too. Check out the video explainer here –

And Next…?

Who knows, but we’re looking forward to seeing how this fast-moving set of technologies evolves, and we’ll be aiming to bring you more updates next month.

Don’t forget to drop us a line or add comments to continue the conversation with us on this.
