S4 E124 Machinima News Omnibus (Apr 2024)

A packed review this month, with some sad 🙁, better 😐 and happy 🙂 news

YouTube Version of this Episode

Show Notes and Links

Endings

The long-anticipated demise of Rooster Teeth has been announced – as has the much-anticipated final season of Red Vs Blue in this trailer –

If you are a die-hard RTer, here’s a link to the Rooster Teeth team’s response to Warner’s announcement, ‘Not a Final Goodbye’ –

Tracy and Ben’s Pioneers in Machinima book chapter is here too, Chapter 4: Rooster Teeth Bites.

Here’s the link to the Special Ep, Going Global, released at the First European Machinima Festival in 2007 (in Leicester, UK) –

Articles: Variety, Deadline and IGN.

Another ending: Draxtor Despres’ Second Life series supported by Linden Lab, Drax Files: World Makers. Here’s his final report and here’s a link to a livestream he did to celebrate the achievements of the show –

Accolades

Sam Crane’s Hamlet in GTA5 won a Jury prize for best documentary at SXSW 2024, link to news coverage here, and the distribution deal awarded here.

Electro League and Weta’s War is Over has become the first UE5 film to win an Oscar, Best Animated Short –

Aye AI AI…

YouTube has updated its T&Cs; there’s a nice article here

ConvAI has announced a partnership with Unity for NPCs, and also teamed up with Second Life. Here’s a link to Wagner James Au’s New World Notes blog – and here’s a couple of useful links –

Stability AI has introduced a 3D video generator, information here

RunwayML has partnered with Musixmatch to generate video to lyrics, link to information here

and it has also announced a new lipsync feature for generative audio, currently on early access to its creators programme members

Hume has a demo of a voice-to-voice generator, described as an Empathic Voice Interface – link to sign-up for early access here

and if you need a little overview of how all things generative AI are developing, here’s a nice video summary by Henrik Kniberg –

Inspiration

To celebrate the phenomenal success of Godzilla Minus One at the 2024 Oscars, Nelson Escobar, the VFX creator of the Godzilla model, has released it as a free download for Blender. Link here –

Blockbuster Inc has announced its release date: 6 June 2024. Here’s the official gameplay trailer –

and here’s the link to Prologue on Steam

Finally, here’s the link to the interview with Denis Villeneuve, discussing his approach to making the latest Dune films –

Post Script

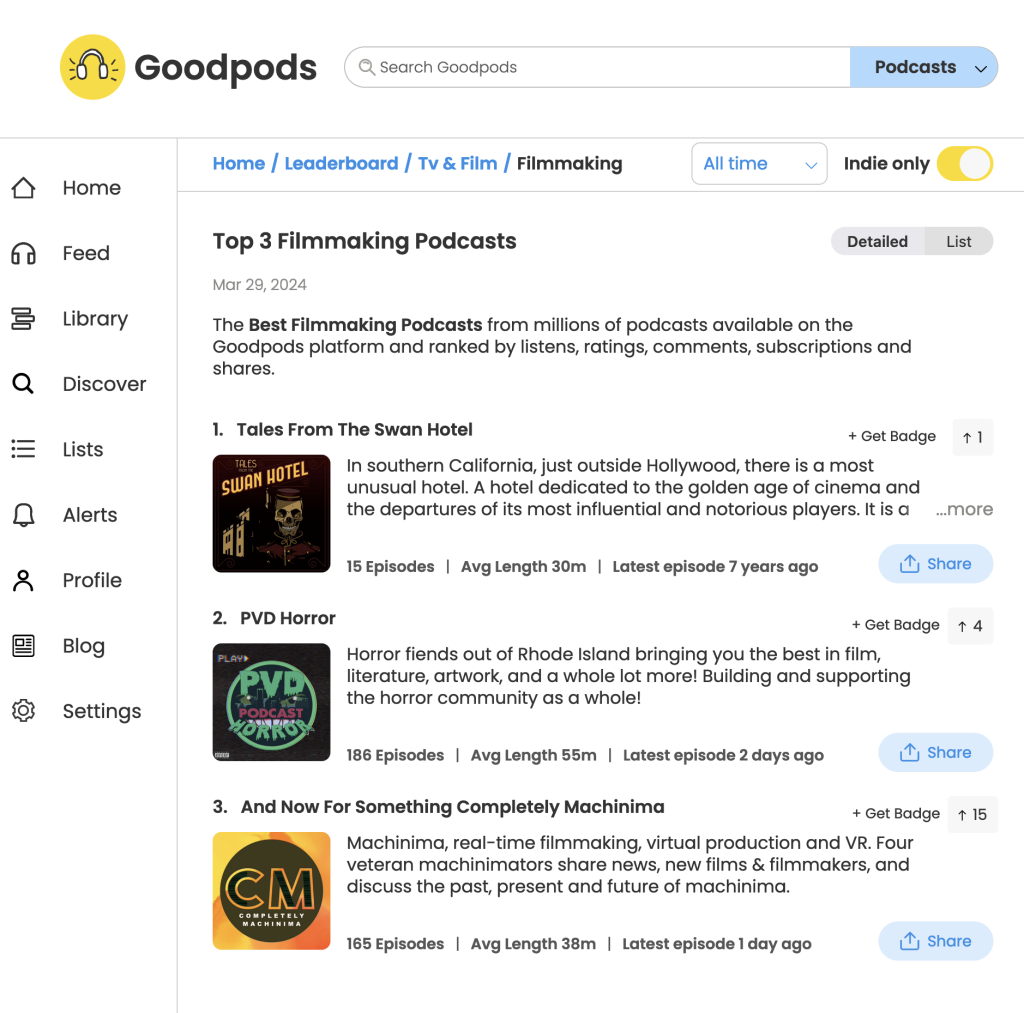

We are super excited to see our Podcast make the Top 3 listing of indie filmmaking podcasts on Goodpods in March 2024 – how cool is that?!
