Tech Update 1: AI Generators (Apr 2023)

March was another astonishing month in the world of AI genies with the release of exponentially powerful updates (GPT4 released 14 March; Baidu released Ernie Bot on 16 March), new services and APIs. It is not surprising that by the end of the month, Musk-oil is being poured over the ‘troubling waters’ – will it work now the genie is out of the bottle? Its anyone’s guess and certainly it seems a bit of trickery is the only way to get it back into the bottle at this stage.

Rights

More importantly, and with immediate effect, the US Copyright Office issued a statement on 16 March in relation to the IP issues that have been hot on many lips for several months now: registrations pertaining to copyright are about the processes of human creativity, where the role of generative AI is simply seen as a toolset under current legal copyright registration guidance. Thus, for example, in the case of Zarya of the Dawn (refer our comments in the Feb 2023 Tech Update), whilst the graphic novel contains original concepts that are attributable to the author, the use of images generated by AI (in the case of Zarya, MidJourney) are not copyrightable. The statement also makes it clear that each copyright registration case will be viewed on its own merit which is surely going to make for a growing backlog of cases in the coming months. It requires detailed clarification of how generative AI is used by human creators in each copyright case to help with the evaluation processes.

The statement also highlights that an inquiry into copyright and generative AIs will be undertaken across agencies later in 2023, where it will seek general public and legal input to evaluate how the law should apply to the use of copyrighted works in “AI training and the resulting treatment of outputs”. Read the full statement here. So, for now at least, the main legal framework in the US remains one of human copyright, where it will be important to keep detailed notes about how creators generated (engineered) content from AIs, as well as adapted and used the outputs, irrespective of the tools used. This will no doubt be a very interesting debate to follow, quite possibly leading to new ways of classifying content generated by AIs… and through which some suggest AIs as autonomous entities with rights could become recognized. It is clear in the statement, for example, that the US Copyright Office recognizes that machines can create (and hallucinate).

The complex issues of the dataset creation and AI training processes will underpin much of the legal stances taken and a paper released at the beginning of Feb 2023 could become one of the defining pieces of research that undermines it all. The research extracted near exact copyrighted images of identified people from a diffusion model, suggesting that it can lead to privacy violations. See a review here and for the full paper go here.

In the meantime, more creative platforms used to showcase creative work are introducing tagging systems to help identify AI generated content – #NoAI, #CreatedWithAI. Sketchfab joined the list at the end of Feb with its update here, with updates relating to its own re-use of such content through its licensing system coming into effect on 23 March.

NVisionary

Nvidia’s progressive march with AI genies needs an AI to keep up with it! Here’s my attempt to review the last month of releases relevant to the world of machinima and virtual production.

In February, we highlighted ControlNet as a means to focus on specific aspects of image generation and this month, on 8 March, Nvidia released the opposite which takes the outline of an image and infills it, called Prismer. You can find the description and code on its NVlabs GitHub page here.

Alongside the portfolio of generative AI tools Nvidia has launched in recent months, with the advent of OpenAI’s GPT4 in March, Nvidia is expanding its tools for creating 3D content –

It is also providing an advanced means to search its already massive database of unclassified 3D objects, integrating with its previously launched Omniverse DeepSearch AI librarian –

It released its cloud-based Picasso generative AI service at GTC23 on 23 March, which is a means to create copyright cleared images, videos and 3D applications. A cloud service is of course a really great idea because who can afford to keep up with the graphics cards prices? The focus for this is enterprise level, however, which no doubt means its not targeting indies at this stage but then again, does it need to when indies are already using DALL-E, Stable Diffusion, MidJourney, etc. Here’s a link to the launch video and here is a link to the wait list –

Pro-seed-ural

A procedural content generator for creating alleyways has been released by Difffuse Studios in the Blender Marketplace, link here and see the video demo here –

We spotted a useful social thread that highlights how to create consistent characters in Midjourney, by Nick St Pierre, using seeds –

and you can see the result of the approach in his example of an aging girl here –

Animation

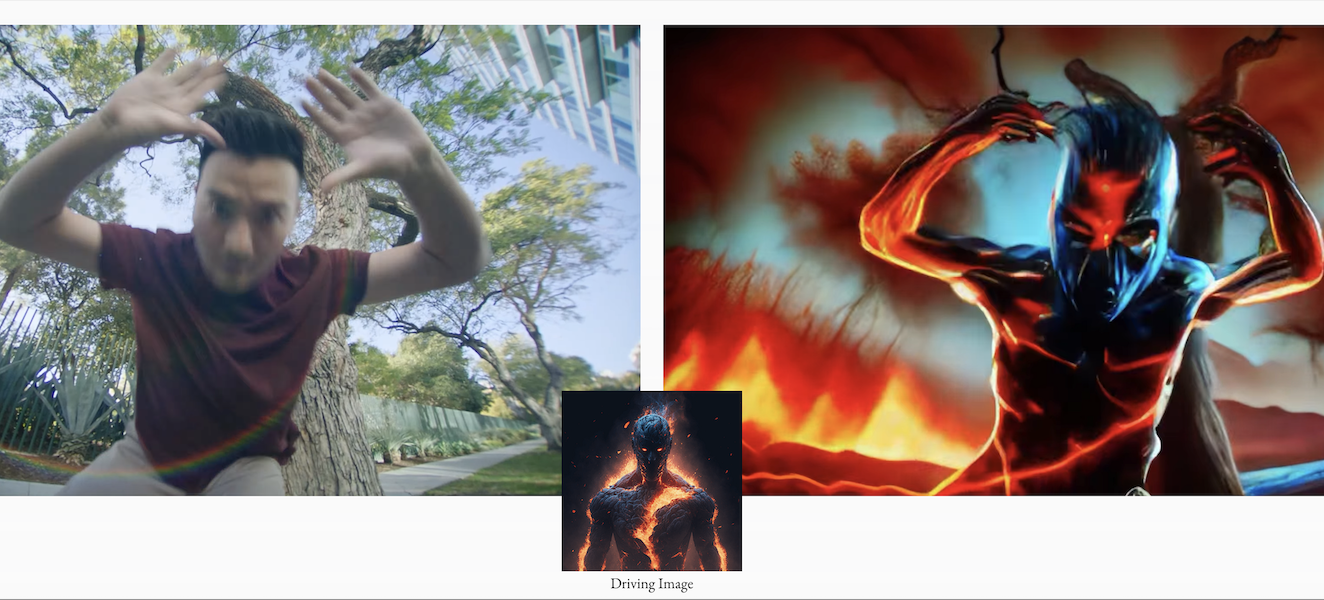

JSFilmz created an interesting character animation using MidJourney5 (which released on 17 March) with advanced character detail features. This really shows its potential alongside animation toolsets such as Character Creator and Metahumans –

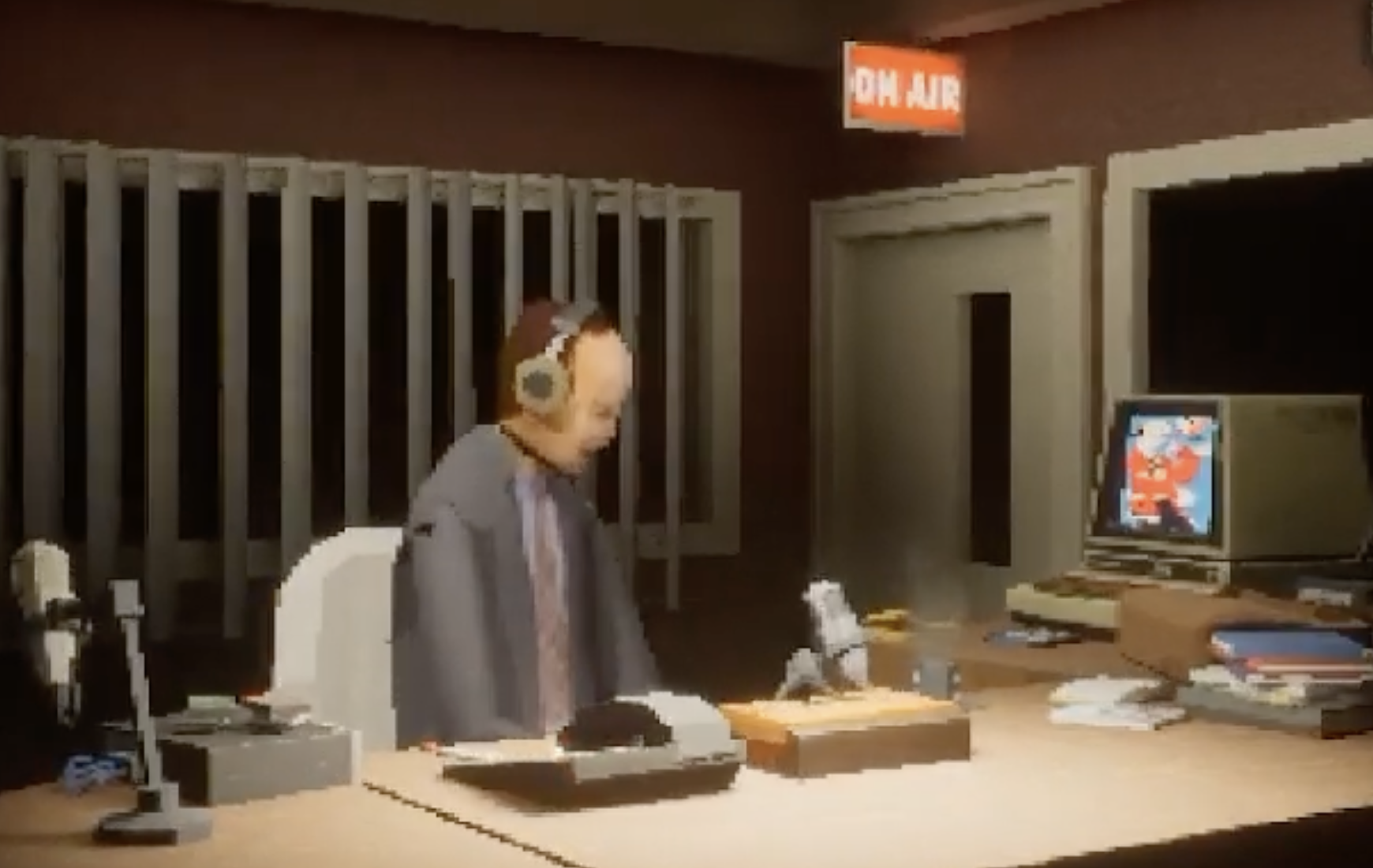

Runway’s Gen-2 text-to-video platform launched on 20 March, with higher fidelity and consistency in the outputs than its previous version (which was actually video-to-video output). Here’s a link to the sign-up and website, which includes an outline of the workflow. Here’s the demo –

Gen-2 is also our feature image for this blog post, illustrating the stylization process stage which looks great.

Wonder Dynamics launched on 9 March as a new tool for automating CG animations from characters that you can upload to its cloud service, giving creators the ability to tell stories without all the technical paraphenalia (mmm?). The toolset is being heralded as a means to democratize VFX and it is impressive to see that Aaron Sims Creative are providing some free assets to use with this and even more so to see none other than Steven Spielberg on the Advisory Board. Here’s the demo reel, although so far we’ve not found anyone that’s given it a full trial (its in closed beta at the moment) and shared their overview –

Finally for this month, we close this post with Disney’s Aaron Blaise and his video response to Corridor Crew’s use of generative AI to create a ‘new’ anime workflow, which we commented on last month here. We love his open-minded response to their approach. Check out the video here –

Recent Comments