Tech Update 1 (Nov 2022)

Hot on the heels of last week's discussion of AI generators, we are interested to see tools already emerging that turn text prompts into 3D objects and film content, as well as a tool for making music. We have no fewer than five interesting updates to share here, plus a potentially very useful tool for rigging the character assets you create!

Another area of rapid technological development is mocap, especially markerless mocap, which, let's face it, is really the only way to think about creating naturalistic movement-based content. We share two interesting updates this week.

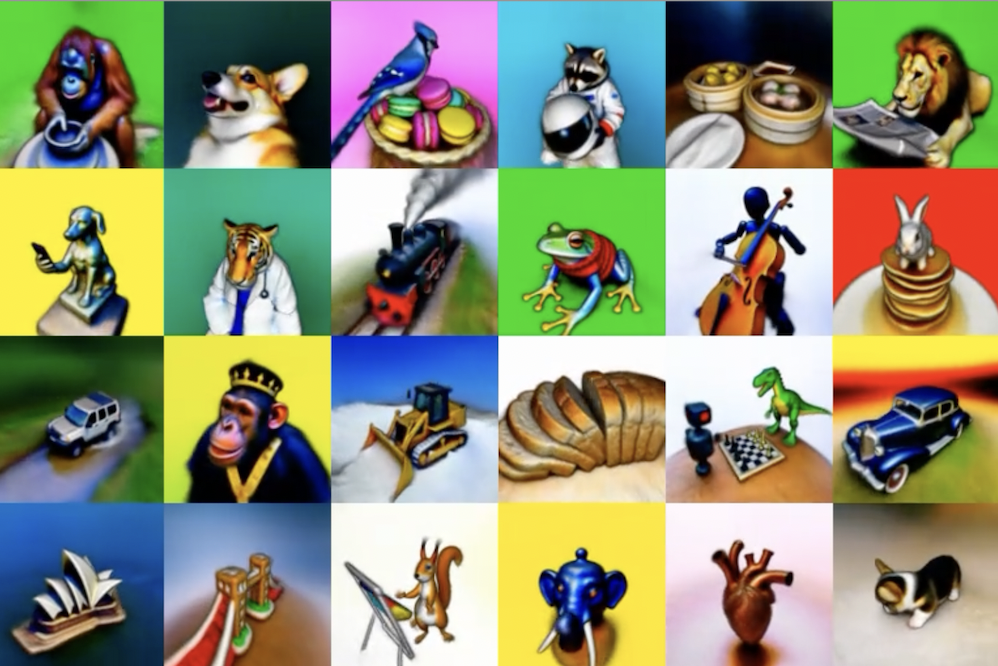

AI Generators

Nvidia has launched an AI tool that generates 3D objects (see video). Called GET3D (short for ‘Generate Explicit Textured 3D meshes’), the tool can generate characters and other 3D objects, as Isha Salian explains on the Nvidia blog (23 Sept). The code is currently available on GitHub, with instructions on how to use it here.

Google Research, working with researchers at the University of California, Berkeley, is also developing similar tools (reported in Gigazine on 30 Sept). Its DreamFusion tool uses NeRF (neural radiance field) technology to create 3D models that can be exported to 3D renderers and modeling software. You can find the tool on GitHub here.

Meta has developed a text-to-video generator called Make-A-Video. The tool can also animate a single image or fill in the motion between two images. It currently generates five-second videos, which are perfect for background shots in your film. Check out the details on their website here (and sign up for their updates too). Let us know how you get on with this one too!

Runway has released Erase and Replace, a Stable Diffusion-based tool that lets creators erase the parts of an image they don't like and replace them with something they do (reported in 80.lv on 19 Oct). There are some introductory videos on Runway’s YouTube channel (see below for the introduction to the tool).

And finally, also available on GitHub, is Mubert, a text-to-music generator that uses a Deforum Stable Diffusion colab. Described as proprietary tech, it comes with a custom license from its creator, which states that anything created with it cannot be released as your own on DSPs (digital service providers, i.e. music streaming and download platforms). It can be used for free to sync with images and videos, provided you give attribution by mentioning @mubertapp and the hashtag #mubert; if you need a commercial license, you can contact them directly.

Character Rigging

Reallusion’s Character Creator 4.1 has launched with built-in AccuRIG tech, which turns any static model into an animation-ready character and also comes with cross-platform support. No doubt very useful for those assets you might want to import from any AI generators you use!

Motion Capture Developments

That ever-ready multi-tool, the digital equivalent of the Swiss Army knife, has come to the rescue once again: the iPhone can now be used for full-body mocap in Unreal Engine 5.1, as illustrated by Jae Solina, aka JSFilmz, in his video (below). Jae used move.ai, which is rapidly becoming the gold standard in markerless mocap tech; a growing number of demo videos on YouTube show how much detail in movement it can capture. You can find move.ai tutorials on Vimeo here, and for details of which smartphone models and versions you can use, go to their website here. It's very impressive.

Another form of capture focuses on the detail of the image itself. Reality Capture has launched a tool you can use to capture yourself (or anyone else, for that matter, including your best doggo buddy) and then import the resulting mesh into Unreal’s MetaHuman. Even more impressive, Reality Capture is free; download details are here.

We’d love to hear how you get on with any of the tools we’ve covered this week – hit the ‘talk’ button on the menu bar up top and let us know.
